PDS-DPO

Multimodal Preference Data Synthetic Alignment with Reward Model

Abstract

Multimodal large language models (MLLMs) have significantly advanced tasks like caption generation and visual question answering by integrating visual and textual data. However, they sometimes produce misleading or hallucinated content due to discrepancies between their pre-training data and real user prompts. Existing approaches using Direct Preference Optimization (DPO) in vision-language tasks often rely on strong models like GPT-4 or CLIP to determine positive and negative responses. Here, we propose a new DPO variant that leverages synthetic data from generative and reward models as proxies for human preferences to improve MLLM alignment efficiently. The resulting DPO dataset, ranging from 2K to 9K image-text pairs, was evaluated on LLaVA-v1.5-7B, where our approach demonstrated substantial improvements in both the trustworthiness and reasoning capabilities of the base model across multiple hallucination and vision-language benchmarks. The experimental results indicate that integrating selected synthetic data, such as data from generative and reward models, can effectively reduce reliance on human-annotated data while enhancing MLLMs' alignment capability, offering a scalable solution for safer deployment.

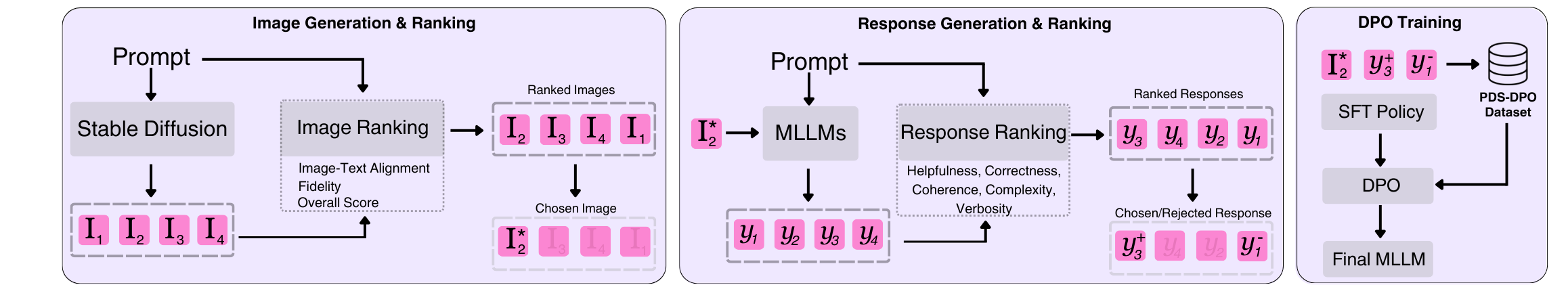

Method

The proposed PDS-DPO framework:

Highlights

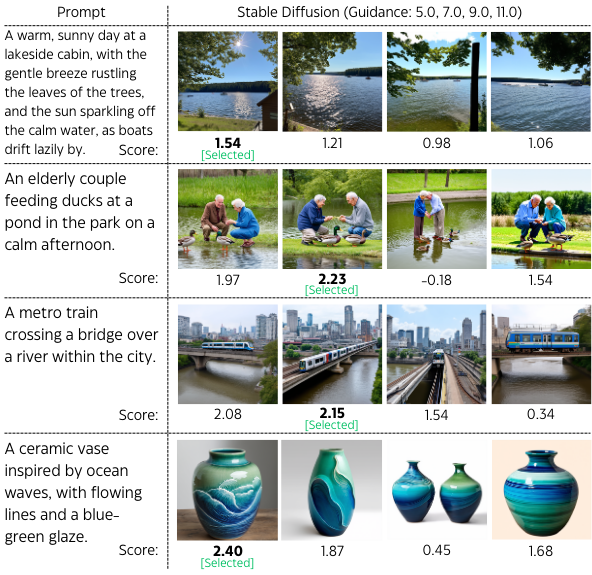

Our framework generates multiple images using Stable Diffusion and retains only the one with the highest scalar score as determined by the reward model:

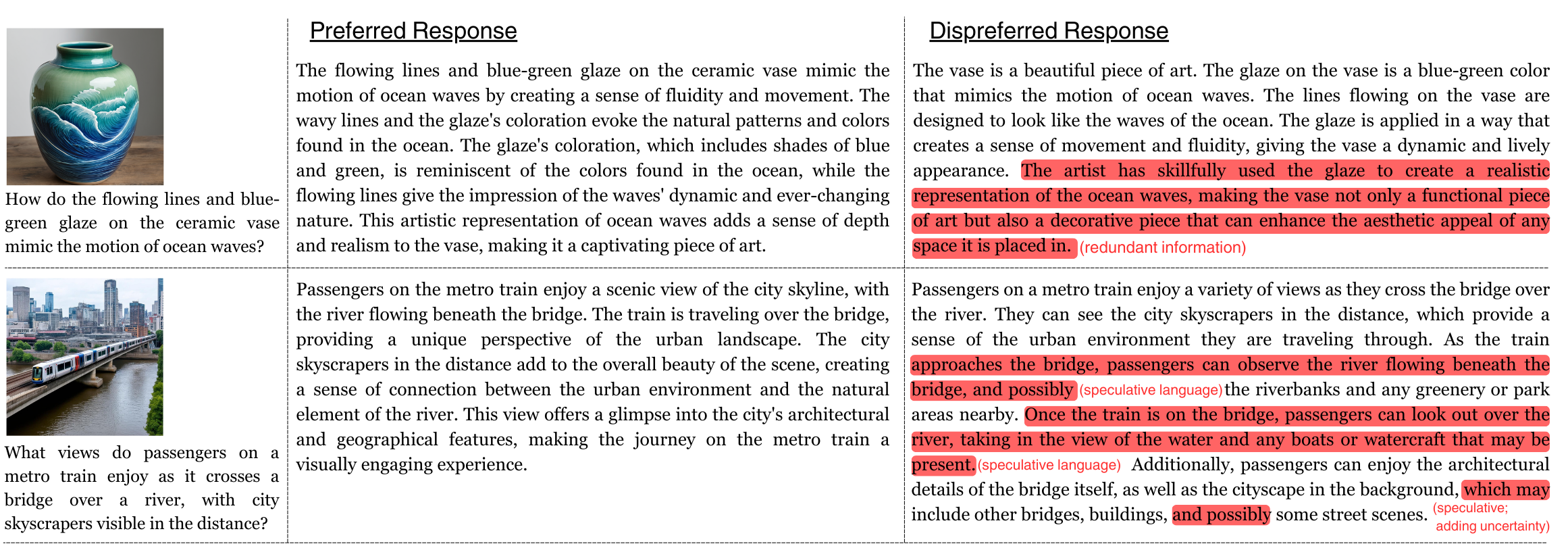
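The image selection step can be read as a best-of-N filter. The following is a minimal sketch of that idea, not the authors' implementation: `select_best` and `score_fn` are hypothetical names, and `score_fn` stands in for whatever reward model assigns a scalar score to each generated image.

```python
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


def select_best(
    candidates: Sequence[T],
    score_fn: Callable[[T], float],
) -> Tuple[T, float]:
    """Return the candidate with the highest scalar score, plus that score.

    `score_fn` is a placeholder (assumption) for a reward model call.
    Ties are resolved in favor of the earliest candidate.
    """
    if not candidates:
        raise ValueError("candidates must be non-empty")
    # Score each candidate exactly once, keeping its index for lookup.
    scored = [(score_fn(c), i) for i, c in enumerate(candidates)]
    best_score, best_idx = max(scored, key=lambda pair: pair[0])
    return candidates[best_idx], best_score


if __name__ == "__main__":
    # Toy stand-in: file names with made-up scores instead of real images.
    toy_scores = {"img_0.png": 0.12, "img_1.png": 0.87, "img_2.png": 0.45}
    best, score = select_best(list(toy_scores), toy_scores.__getitem__)
    print(best, score)
```

In practice the candidates would be the images generated from a single prompt, and only the returned image would be kept for the preference dataset.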

Similar to the images, we rank the generated responses from open-source MLLMs and retain only the one that is preferred:

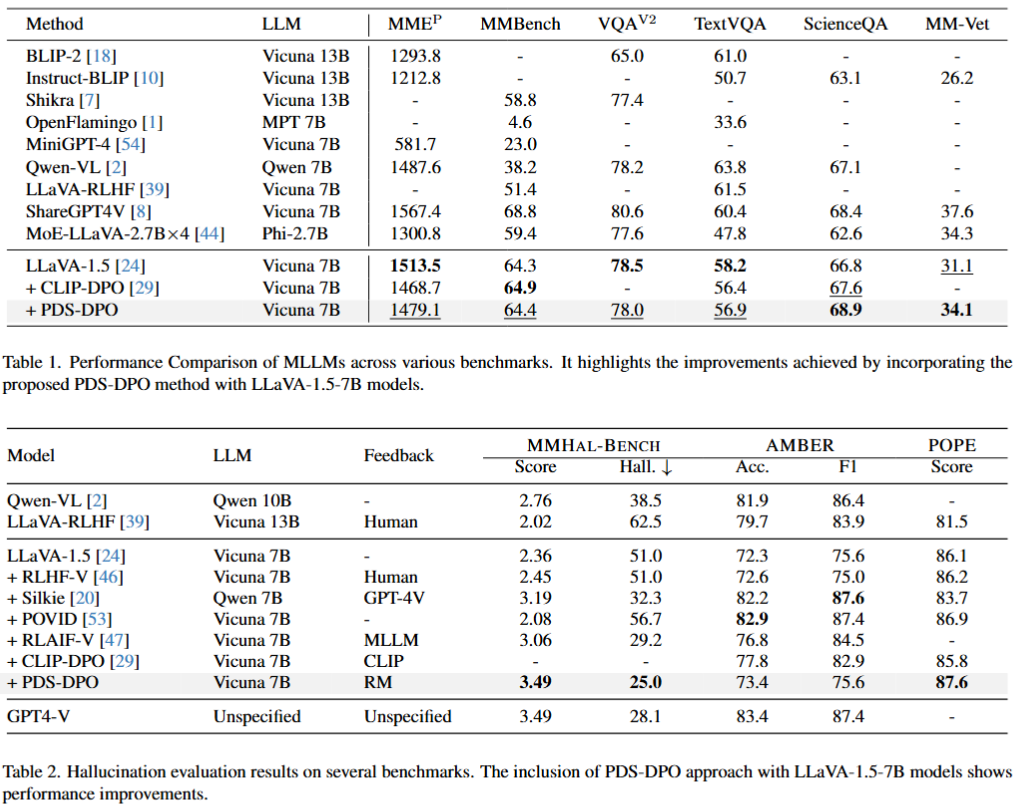
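Response ranking can be sketched in the same way. The example below is illustrative only: `PreferencePair`, `build_preference_pair`, and `score_fn` are hypothetical names, and taking the lowest-scored response as the rejected side of the DPO pair is an assumption made here for illustration, since the text above only states which response is retained as preferred.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # highest-scored response
    rejected: str  # lowest-scored response (assumption, see lead-in)


def build_preference_pair(
    prompt: str,
    responses: Sequence[str],
    score_fn: Callable[[str, str], float],
) -> PreferencePair:
    """Rank responses by a scalar reward and form one preference pair.

    `score_fn(prompt, response)` is a placeholder (assumption) for a
    reward model that scores a response given its prompt.
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses to form a pair")
    # Sort from highest to lowest score; each response is scored once.
    ranked = sorted(responses, key=lambda r: score_fn(prompt, r), reverse=True)
    return PreferencePair(prompt=prompt, chosen=ranked[0], rejected=ranked[-1])


if __name__ == "__main__":
    # Toy reward: prefers shorter answers. A real reward model would go here.
    pair = build_preference_pair(
        "What color is the bus?",
        ["The bus is red.", "It is a red bus parked near a tree and a dog."],
        lambda prompt, response: -float(len(response)),
    )
    print(pair.chosen, "|", pair.rejected)
```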

Competitive results on both vision-language and hallucination task benchmarks:

Citation

@article{wijaya2024multimodal,
  title={Multimodal Preference Data Synthetic Alignment with Reward Model},
  author={Wijaya, Robert and Nguyen, Ngoc-Bao and Cheung, Ngai-Man},
  journal={arXiv preprint arXiv:2412.17417},
  year={2024}
}

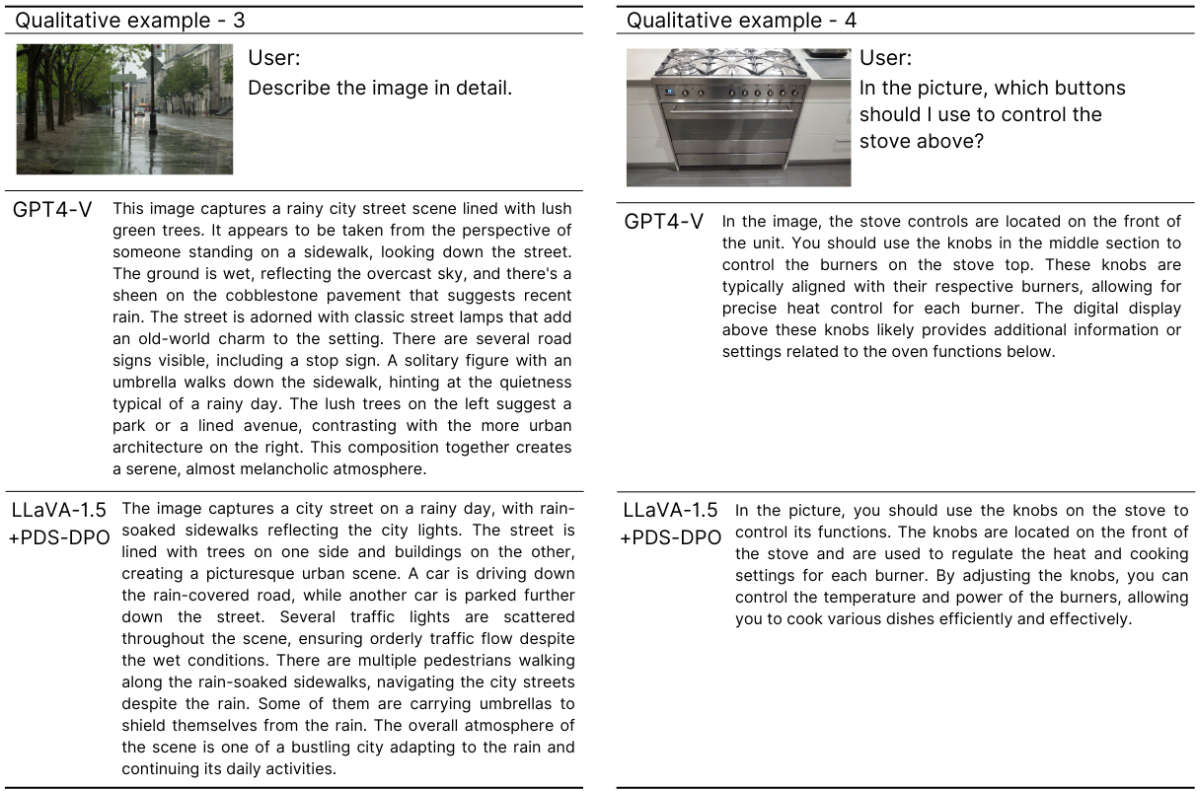

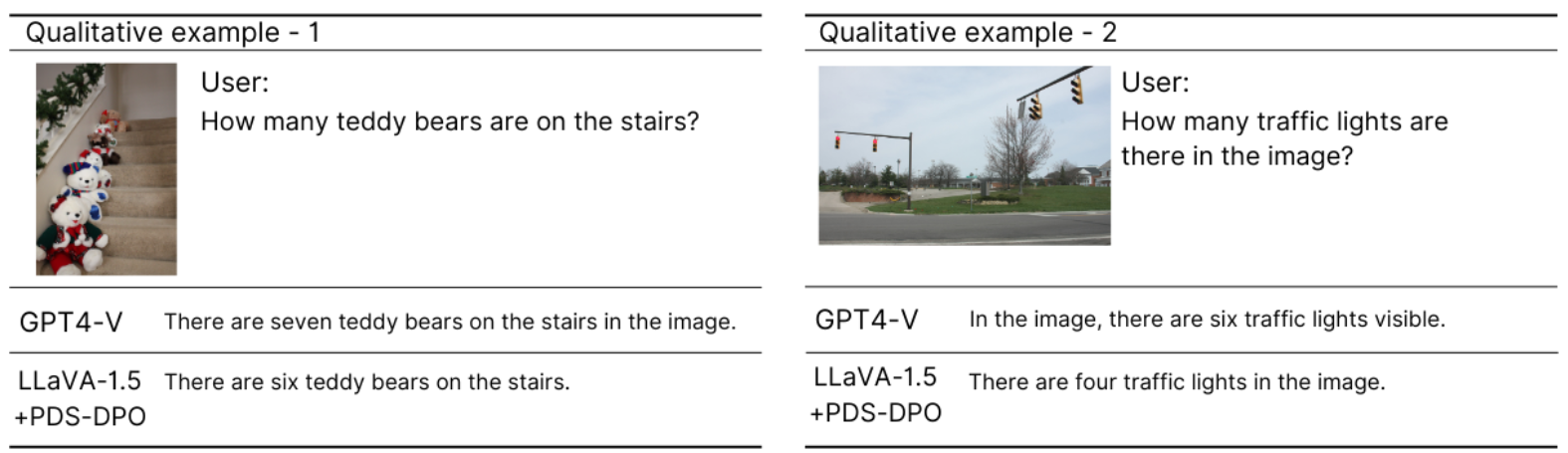

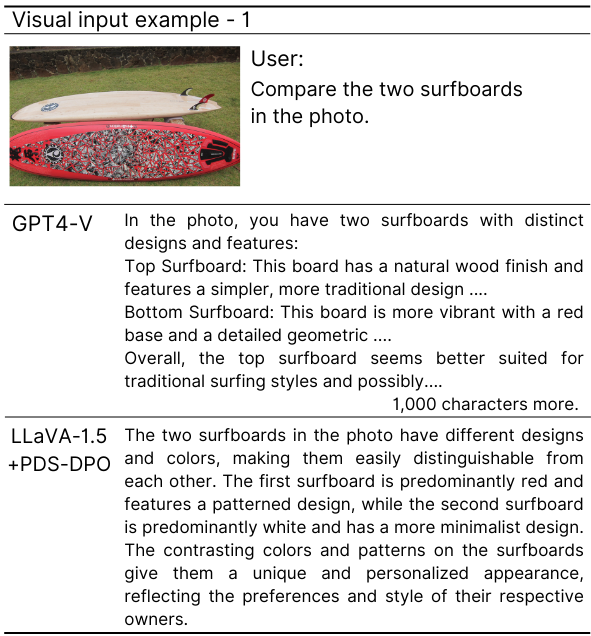

Examples

- Short-form QA: PDS-DPO can give a more trustworthy answer in short-form QA.

- Long-form QA: PDS-DPO can generate a more concise but still informative answer.

- Long-form QA: PDS-DPO can provide an image description with detailed reasoning and fewer hallucinations.